Introduction to Live Data Streaming Pipelines

In today’s rapidly evolving data landscape, the need for immediate and actionable insights has become paramount. This shift has led to the emergence of live data streaming pipelines, a pivotal concept that allows organizations to process data in real time as it flows into their systems. Unlike traditional batch processing methods, which analyze data at specific intervals, live data streaming pipelines continuously ingest, process, and analyze data, enabling stakeholders to make timely decisions based on the most current information available.

At the core of live data streaming pipelines is the ability to handle vast volumes of data generated from various sources, including IoT devices, social media platforms, and enterprise applications. As organizations increasingly rely on real-time data to enhance operations, optimize customer experiences, and drive innovation, these pipelines matter most where the value of information decays quickly: a fraud alert, a stock-out warning, or an equipment fault is worth far more when it arrives within seconds than when it appears in tomorrow's report.

The primary objective of a live data streaming pipeline is to deliver insights quickly enough that they can be acted upon without delay. For instance, a retail company can use live data streaming to monitor inventory levels and sales patterns, enabling proactive restocking strategies that maximize revenue potential. By not only capturing real-time data but also processing it efficiently, organizations can gain a holistic view of their operations and quickly adapt to changing circumstances.

Live data streaming pipelines can also enhance predictive analytics. By continuously analyzing incoming data, organizations can identify trends earlier and adjust their strategies accordingly. Taken together, integrating live data streaming pipelines into organizational frameworks is transforming how data is used, setting the stage for a future driven by instantaneous insights and agile decision-making.

Differences Between Live Data Streaming and Batch Processing

The fundamental distinction between live data streaming and batch processing lies in their approaches to data handling. Live data streaming refers to the continuous flow of data, processed in real time as it arrives. This method is crucial for applications that require immediate responses, such as stock trading platforms or social media monitoring tools. In contrast, batch processing accumulates data over a defined period and then processes it all at once. Typical examples include payroll runs and periodic customer activity reports.

One of the most significant differences between these two methods is the frequency of data updates. In live data streaming, data is updated continuously, providing up-to-the-minute insights and allowing organizations to react swiftly to emerging trends. This real-time capability is invaluable for businesses that operate in fast-paced environments where timely decisions are critical. On the other hand, batch processing typically operates on a scheduled basis, often daily or weekly, making it suitable for less time-sensitive applications.

Furthermore, the response time associated with each method varies greatly. Live data streaming systems are built for low-latency processing, ensuring that data is available for analysis almost instantaneously. This immediacy empowers organizations to make data-driven decisions in real-time. Batch processing, while efficient for large datasets, usually comes with higher latency, as the entire batch needs to be collected and analyzed before insights can be generated.
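
To make the latency difference concrete, here is a minimal Python sketch; the function names and sales figures are illustrative only. The batch version cannot report anything until every record has been collected, whereas the streaming version updates its result and emits it after each event.

```python
from typing import Iterable, Iterator


def batch_total(amounts: Iterable[float]) -> float:
    # Batch: nothing is reported until the whole collection has been gathered.
    collected = list(amounts)
    return sum(collected)


def streaming_totals(amounts: Iterable[float]) -> Iterator[float]:
    # Streaming: update the running total and emit it after every event.
    total = 0.0
    for amount in amounts:
        total += amount
        yield total


if __name__ == "__main__":
    sales = [20.0, 5.5, 12.0, 3.25]
    for running in streaming_totals(sales):
        print("running total so far:", running)
    print("batch result:", batch_total(sales))
```

Both approaches reach the same final figure; the difference is that the streaming version has a usable answer after the first event rather than only after the last.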

In terms of data analysis, live data streaming supports continuous analytics, enabling organizations to extract insights as data is ingested. This is particularly advantageous for scenarios such as fraud detection, where anomalies must be identified instantly. Conversely, batch processing is effective for retrospective analysis, where insights derived from historical data can drive strategic decisions.
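
Continuous anomaly detection of the kind used in fraud screening can be sketched with a running z-score. The sketch below swaps in one simple, well-known technique, Welford's online algorithm for mean and variance, so each event is scored against the history seen so far without storing that history. The threshold of 3 standard deviations and the 5-event warm-up are assumptions chosen for illustration; production fraud systems use far richer features and models.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: running mean and variance without storing history."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def stddev(self) -> float:
        # Sample standard deviation; reported as 0.0 until two values have been seen.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def is_anomalous(stats: RunningStats, x: float,
                 threshold: float = 3.0, warmup: int = 5) -> bool:
    """Score x against the history seen so far, then add x to that history."""
    flagged = False
    if stats.count >= warmup:  # assumed warm-up before any scoring happens
        sd = stats.stddev()
        flagged = sd > 0 and abs(x - stats.mean) / sd > threshold
    stats.update(x)
    return flagged


if __name__ == "__main__":
    stats = RunningStats()
    amounts = [50, 52, 48, 51, 49, 50, 500]
    print([is_anomalous(stats, a) for a in amounts])
```

This sketch folds every value, including flagged ones, into the statistics; a real detector might exclude confirmed anomalies so they do not inflate the baseline.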

Key Characteristics of Live Data Streaming Pipelines

Live data streaming pipelines are essential frameworks for managing and processing data as it is generated. Several key characteristics define these pipelines, ensuring their effectiveness in real-time applications. First and foremost is low latency, which enables rapid data transfer and processing. In many use cases, such as financial trading or online gaming, even a few milliseconds of delay can have significant consequences. Therefore, minimizing latency is crucial for maintaining the competitive edge required in industries that rely heavily on quick, data-driven decisions.

Another defining feature of live data streaming pipelines is real-time processing. This capability allows organizations to analyze and act on data almost instantaneously, resulting in improved decision-making and operational efficiency. For instance, businesses can instantly monitor user behaviors on their platforms and adjust marketing strategies or product offerings accordingly. Real-time processing empowers organizations to stay agile, respond to market changes, and better serve their customers.

Scalability is also vital in the context of live data streaming. As data volumes grow, the pipeline must absorb increased load without sacrificing performance, which is typically achieved by partitioning streams and running more processing instances in parallel (horizontal scaling) rather than relying on ever-larger single machines. A well-designed streaming pipeline can manage large numbers of simultaneous data sources and support analysis across extensive data sets. Scalability is not only about handling larger volumes but also about adapting to varying data sources, which brings us to the next characteristic: integration capabilities.
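
A common way to scale a stream horizontally is to split it into partitions by key, so that several workers can process different partitions in parallel while all events for a given key stay together and in order. The minimal sketch below assumes string keys and uses CRC-32 as a stable hash; systems such as Apache Kafka apply the same idea with their own hash function, so this is not their exact algorithm.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

KeyedEvent = Tuple[str, object]  # (key, payload); the payload type is arbitrary here


def partition_for(key: str, num_partitions: int) -> int:
    # A stable hash keeps each key on the same partition across runs and machines.
    # Python's built-in hash() is randomized per process for str, so it is avoided.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign(events: Iterable[KeyedEvent], num_partitions: int) -> Dict[int, List[KeyedEvent]]:
    # Each partition preserves arrival order for the keys routed to it.
    partitions: Dict[int, List[KeyedEvent]] = defaultdict(list)
    for key, payload in events:
        partitions[partition_for(key, num_partitions)].append((key, payload))
    return partitions


if __name__ == "__main__":
    events = [("sensor-1", 20.5), ("sensor-2", 19.0), ("sensor-1", 21.0)]
    for partition, items in sorted(assign(events, 4).items()):
        print(partition, items)
```

Adding workers then means assigning partitions to more consumers, without any single worker needing to see the whole stream.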

Integrating various data sources, whether on-premises or in the cloud, is essential for a flexible streaming pipeline. Organizations often draw data from diverse systems, including sensors, user interactions, and databases. An effective live data streaming pipeline must seamlessly accommodate these diverse sources to provide a holistic view of the data landscape.
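
One way to integrate heterogeneous sources is to translate each into a single internal event schema as it enters the pipeline, so downstream steps handle only one shape of data. In this sketch the `Event` fields and the two raw payload formats (`sensor` and `web`) are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict


@dataclass(frozen=True)
class Event:
    source: str
    entity_id: str
    value: float
    timestamp: datetime  # always timezone-aware UTC after normalization


def from_sensor(raw: dict) -> Event:
    # Hypothetical sensor payload: epoch seconds plus a numeric reading.
    return Event("sensor", raw["device"], float(raw["reading"]),
                 datetime.fromtimestamp(raw["ts"], tz=timezone.utc))


def from_clickstream(raw: dict) -> Event:
    # Hypothetical web payload: ISO-8601 time with an offset; each click counts as 1.
    return Event("web", raw["user_id"], 1.0,
                 datetime.fromisoformat(raw["time"]).astimezone(timezone.utc))


NORMALIZERS: Dict[str, Callable[[dict], Event]] = {
    "sensor": from_sensor,
    "web": from_clickstream,
}


def normalize(source: str, raw: dict) -> Event:
    return NORMALIZERS[source](raw)


if __name__ == "__main__":
    print(normalize("sensor", {"device": "d1", "reading": "21.5", "ts": 0}))
    print(normalize("web", {"user_id": "u9", "time": "2024-05-01T14:00:00+02:00"}))
```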

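Finally, fault tolerance is a critical feature of live data streaming pipelines. Systems must be resilient, recovering from crashes, network failures, or restarts without losing or corrupting data. This is commonly achieved by persisting the pipeline's position in each input stream, along with any intermediate state, so that processing can resume from a known point after a failure. Fault tolerance is vital for maintaining trust in analytics outputs and ensuring operational continuity, especially when real-time insights are paramount.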
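
A standard building block for fault tolerance is to checkpoint how far through the input the pipeline has progressed and to resume from that checkpoint after a failure. The toy consumer below is a hypothetical class: an in-memory list stands in for a durable log, and an attribute stands in for durable checkpoint storage. It commits its offset only after a record is processed successfully. Production systems, such as Kafka consumers committing offsets or Flink checkpointing operator state, apply the same principle with durable storage.

```python
from typing import Callable, List


class CheckpointedConsumer:
    """Toy consumer that commits its offset only after a record is processed."""

    def __init__(self, log: List[str], handler: Callable[[str], None]) -> None:
        self.log = log            # stands in for a durable, append-only log
        self.handler = handler
        self.committed = 0        # stands in for a durably stored checkpoint

    def run(self) -> None:
        # Always resume from the last committed offset.
        offset = self.committed
        while offset < len(self.log):
            self.handler(self.log[offset])  # may raise, simulating a crash
            offset += 1
            self.committed = offset         # commit only after success


if __name__ == "__main__":
    processed = []
    fail_once = {"c"}

    def handler(record: str) -> None:
        if record in fail_once:
            fail_once.discard(record)
            raise RuntimeError(f"simulated crash on {record!r}")
        processed.append(record)

    consumer = CheckpointedConsumer(["a", "b", "c", "d"], handler)
    try:
        consumer.run()
    except RuntimeError as err:
        print(err, "| committed offset:", consumer.committed)
    consumer.run()  # "restart": resumes at the committed offset
    print(processed)
```

Because the commit happens after processing, a crash between the two steps can cause a record to be processed twice on restart (at-least-once delivery), which is why handlers in such pipelines are usually written to be idempotent.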

Components of a Live Data Streaming Pipeline

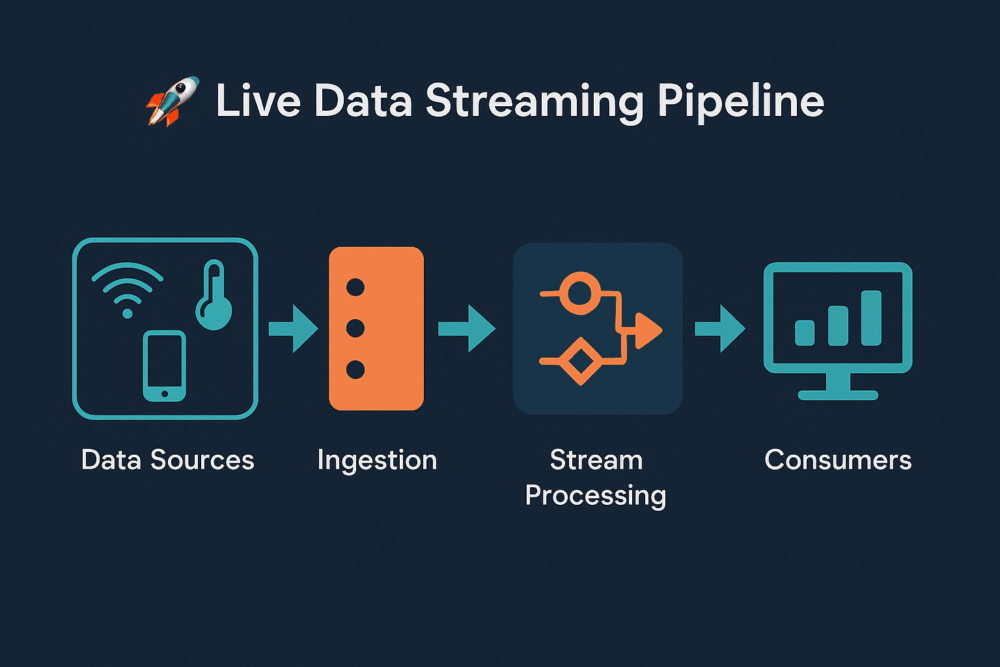

A live data streaming pipeline is a system designed to continuously ingest, process, and deliver data in real-time. This type of architecture is composed of several critical components, each of which plays a vital role in the efficiency and effectiveness of data delivery and analysis. Understanding these components helps to clarify how they interact to facilitate uninterrupted data flow.

The first essential component is the data sources. Data sources vary widely and include IoT devices, web applications, social media feeds, and backend databases. They are the origin points where raw data is generated and collected. Sources can emit structured, semi-structured, or unstructured data, and that format largely determines which processing methods are applied later in the pipeline.

Next is the stream processing engine, which consumes the data streams from the various sources and processes them in real time. In many deployments, events first pass through a durable messaging layer such as Apache Kafka, which buffers and distributes them; processing engines such as Apache Flink, Spark Structured Streaming, or Kafka Streams then apply transformations, filtering, and aggregations as the data flows through the system. This real-time processing ensures that valuable insights can be obtained swiftly, making it possible to act on data as it is generated.
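
The three kinds of operation named above (filtering, transformation, aggregation) can be illustrated with chained Python generators. This is a conceptual sketch, not the API of Flink, Spark, or Kafka Streams: the `(timestamp, page, status_code)` event schema and the 60-second tumbling window are assumptions, and events are assumed to arrive in timestamp order.

```python
from collections import Counter
from typing import Iterable, Iterator, Tuple

# Hypothetical schema: (epoch_seconds, page, http_status_code)
Event = Tuple[int, str, int]


def only_errors(events: Iterable[Event]) -> Iterator[Event]:
    # Filtering: keep server errors only.
    return (e for e in events if e[2] >= 500)


def to_window(events: Iterable[Event], size_s: int) -> Iterator[Tuple[int, str]]:
    # Transformation: map each event to the start of its tumbling window.
    return ((ts - ts % size_s, page) for ts, page, _ in events)


def count_per_window(keyed: Iterable[Tuple[int, str]]) -> Iterator[Tuple[int, Counter]]:
    # Aggregation: emit a window's counts as soon as a later window begins.
    # Assumes in-order input; real engines use watermarks to handle late data.
    current, counts = None, Counter()
    for window, page in keyed:
        if current is not None and window != current:
            yield current, counts
            counts = Counter()
        current = window
        counts[page] += 1
    if current is not None:
        yield current, counts  # flush the final window when the input ends


if __name__ == "__main__":
    events = [(0, "/a", 500), (10, "/a", 200), (30, "/b", 503),
              (65, "/a", 500), (70, "/a", 502)]
    for window_start, counts in count_per_window(to_window(only_errors(events), 60)):
        print(window_start, dict(counts))
```

Because each stage is lazy, every event flows through all three steps as it is read, which mirrors how a streaming engine pipelines operators.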

Another component integral to the pipeline is storage. Unlike batch systems, which write data in periodic bulk loads, live streaming pipelines need storage that can absorb a continuous inflow. Object storage such as Amazon S3 or Azure Blob Storage is commonly used for durable, long-term retention, while databases built for high write throughput, such as Apache Cassandra, or document stores such as MongoDB can serve queries on recent data. Together, these layers support both immediate access and long-term retention, ensuring that data remains available for analysis over extended periods.
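
Writing every event individually to storage is often inefficient, particularly for object stores, so streaming jobs commonly buffer records and write them in batches. The hypothetical `BufferedSink` below flushes newline-delimited JSON once a size limit is reached; the `write_batch` callback stands in for whatever storage client is actually used.

```python
import json
from typing import Callable, List


class BufferedSink:
    """Accumulates records and hands them to storage in batches."""

    def __init__(self, write_batch: Callable[[str], None], max_records: int = 1000) -> None:
        self.write_batch = write_batch  # placeholder for a real storage client call
        self.max_records = max_records
        self.buffer: List[dict] = []

    def add(self, record: dict) -> None:
        self.buffer.append(record)
        if len(self.buffer) >= self.max_records:
            self.flush()

    def flush(self) -> None:
        if not self.buffer:
            return
        # Newline-delimited JSON: one record per line.
        payload = "\n".join(json.dumps(r, sort_keys=True) for r in self.buffer)
        self.write_batch(payload)
        self.buffer = []


if __name__ == "__main__":
    sink = BufferedSink(write_batch=lambda batch: print("WRITE:\n" + batch), max_records=2)
    for i in range(5):
        sink.add({"id": i})
    sink.flush()  # write whatever remains, e.g. on shutdown
```

Real sinks usually also flush on a timer, so that a quiet stream does not leave records sitting in the buffer indefinitely.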

Lastly, visualization tools present processed data in a user-friendly format. Tools like Tableau, Grafana, or custom dashboards allow stakeholders to monitor real-time metrics and make informed decisions based on the insights derived from live data streams. Clear, consistent visualizations translate complex data into actionable information.

Real-Life Examples of Live Data Streaming

Live data streaming has revolutionized various industries, offering remarkable solutions that enhance operational efficiency and improve user experiences. One prevalent application is in social media platforms, where data feeds are constantly updated in real-time. Users receive immediate updates about posts, comments, and messages, facilitating instantaneous interaction and engagement. For instance, platforms like Twitter utilize data streaming to deliver live tweets, ensuring users stay informed of trending topics as they unfold.

Moreover, the Internet of Things (IoT) is a prime sector benefiting from live data streaming. Smart devices collect and transmit data continuously, allowing users to monitor systems like home security or temperature from anywhere. A tangible example is smart home systems that enable homeowners to stream data from security cameras or smoke detectors, ensuring real-time updates and alerts regarding their property’s safety.

In the financial sector, live data streaming is crucial for high-frequency trading and market analysis. Traders rely on streaming data to make swift decisions based on real-time stock prices and market trends. Numerous financial institutions employ data streaming technology to provide clients with up-to-the-minute information, helping them respond effectively to market fluctuations and news events that could impact stock valuations.
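
As an illustration of a continuously updated market metric, the sketch below maintains a volume-weighted average price (VWAP) over a sliding time window, adding each trade as it arrives and evicting trades that have aged out. The class name, the 60-second window in the example, and the trade values are illustrative assumptions, and quantities are assumed to be positive.

```python
from collections import deque
from typing import Deque, Tuple


class SlidingVWAP:
    """Volume-weighted average price over trades from the last `window_s` seconds."""

    def __init__(self, window_s: float) -> None:
        self.window_s = window_s
        self.trades: Deque[Tuple[float, float, float]] = deque()  # (ts, price, qty), oldest first
        self.notional = 0.0  # running sum of price * quantity
        self.volume = 0.0    # running sum of quantity

    def add(self, ts: float, price: float, qty: float) -> float:
        self.trades.append((ts, price, qty))
        self.notional += price * qty
        self.volume += qty
        # Evict trades that have fallen out of the window; the new trade always stays.
        while self.trades and self.trades[0][0] <= ts - self.window_s:
            _, old_price, old_qty = self.trades.popleft()
            self.notional -= old_price * old_qty
            self.volume -= old_qty
        return self.notional / self.volume


if __name__ == "__main__":
    vwap = SlidingVWAP(window_s=60)
    for ts, price, qty in [(0, 100.0, 10), (30, 110.0, 10), (70, 120.0, 20)]:
        print(ts, round(vwap.add(ts, price, qty), 2))
```

Keeping running sums means each update costs time proportional only to the trades evicted, not to the size of the window.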

Customer service chatbots also rely on live data to enhance user interactions. Rather than answering only from static content, they query current data in real time to provide personalized responses to customer inquiries. For example, when a user asks about product availability, the chatbot checks live inventory data and returns immediate, relevant information that improves customer satisfaction and streamlines the buying process.

Overall, these examples illustrate the extensive application of live data streaming across sectors, emphasizing its role in driving efficiency and enhancing user engagement. As businesses continue to harness the potential of streaming data, the impact of this technology will likely expand even further in the future.

Live Data Streaming in Business Applications

Live data streaming has become a fundamental component in various business applications, offering organizations the ability to process and analyze data in real-time. Industries such as finance, healthcare, retail, and telecommunications are leveraging this technology to enhance decision-making, optimize operational processes, and improve customer engagement. In finance, for instance, real-time data streaming is instrumental in fraud detection and risk management, allowing institutions to monitor transactions as they occur and respond swiftly to suspicious activities. By analyzing data streams, financial institutions can make informed, timely decisions that mitigate risks and enhance security.
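
A simple rule-based example of monitoring transactions as they occur is a velocity check, which flags a card used too many times within a short period. The `VelocityCheck` class and its limits (more than 3 transactions in 60 seconds in the example) are hypothetical; real fraud systems combine many such signals.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityCheck:
    """Flags a card with more than `max_txns` transactions within `window_s` seconds."""

    def __init__(self, max_txns: int, window_s: float) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        # card_id -> timestamps of that card's transactions still inside the window
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, card_id: str, ts: float) -> bool:
        times = self.recent[card_id]
        times.append(ts)
        # Drop timestamps that are now older than the window.
        while times and times[0] <= ts - self.window_s:
            times.popleft()
        return len(times) > self.max_txns


if __name__ == "__main__":
    check = VelocityCheck(max_txns=3, window_s=60)
    for ts in (0, 10, 20, 30):
        print("card-1 at", ts, "suspicious:", check.observe("card-1", ts))
```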

In the healthcare sector, live data streaming is transforming patient care by enabling real-time monitoring of patient vitals and health records. Hospitals employ streaming data to track and analyze health metrics continuously, leading to better patient outcomes and more efficient resource allocation. For instance, streaming data from wearable devices can alert healthcare providers to any anomalies, allowing for immediate intervention and personalized care.

Retail businesses also benefit significantly from live data streaming by utilizing it to enhance customer experience and streamline inventory management. Retailers analyze consumer behavior in real-time, allowing them to tailor marketing strategies and optimize stock levels efficiently. This practice improves not only sales outcomes but also customer satisfaction, as shoppers receive more relevant products and offers.

In telecommunications, live data streaming aids in network performance monitoring and management. Telecommunications companies can utilize streaming analytics to detect service disruptions or inefficiencies immediately, allowing them to resolve issues proactively and maintain seamless connectivity for customers. This capability is crucial in a digital age where downtime can result in significant revenue loss and customer dissatisfaction.

Across these sectors, integrating live data streaming into business applications empowers organizations to make informed decisions, enhance customer engagement, and improve operational efficiency, setting a foundation for competitive advantage in an increasingly data-driven marketplace.

Challenges and Considerations for Implementing Live Data Streaming

Implementing live data streaming pipelines poses a variety of challenges that organizations must navigate to ensure successful deployment and functioning. One of the primary concerns is ensuring data quality and consistency. As real-time data is processed, it is crucial to maintain accuracy and reliability. Inconsistent data can lead to erroneous insights and decisions, undermining the value of the data pipeline. Organizations should implement data validation measures and quality checks to mitigate such risks.
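
Validation is often applied to every record as it enters the pipeline, with failing records diverted to a separate "dead-letter" destination for inspection instead of being silently dropped or allowed to corrupt downstream results. The schema and rules in this sketch are hypothetical.

```python
from typing import Any, Dict, Iterable, List, Tuple

Record = Dict[str, Any]

# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"order_id": str, "amount": float}


def validate(record: Record) -> List[str]:
    """Return the problems found; an empty list means the record passed."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    amount = record.get("amount")
    if isinstance(amount, float) and amount < 0:
        problems.append("amount must be non-negative")
    return problems


def route(records: Iterable[Record]) -> Tuple[List[Record], List[Tuple[Record, List[str]]]]:
    """Split records into valid ones and a dead-letter list kept for inspection."""
    valid, dead_letter = [], []
    for record in records:
        problems = validate(record)
        if problems:
            dead_letter.append((record, problems))
        else:
            valid.append(record)
    return valid, dead_letter


if __name__ == "__main__":
    ok, dlq = route([{"order_id": "A1", "amount": 10.0}, {"order_id": "A2"}])
    print("valid:", ok)
    print("dead letter:", dlq)
```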

Managing throughput and scalability presents another significant challenge. Live data streaming often involves handling vast amounts of data at high velocity, which can strain existing infrastructure. Organizations must design their architecture to be robust and flexible, allowing for scalability as data volumes grow. This may involve choosing the right technologies, such as distributed processing frameworks, to effectively manage high-throughput scenarios.
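
One technique for coping with bursts of high-velocity data is backpressure: placing a bounded buffer between stages so that a fast producer is slowed to the pace of its consumer rather than exhausting memory. This minimal asyncio sketch shows the idea; the doubling step and the queue capacity are placeholders.

```python
import asyncio
from typing import List, Optional


async def producer(queue: "asyncio.Queue[Optional[int]]", n: int) -> None:
    for i in range(n):
        # put() suspends while the queue is full, so the producer cannot
        # run ahead of the consumer by more than the queue's capacity.
        await queue.put(i)
    await queue.put(None)  # sentinel: no more events


async def consumer(queue: "asyncio.Queue[Optional[int]]", results: List[int]) -> None:
    while True:
        item = await queue.get()
        if item is None:
            break
        await asyncio.sleep(0)  # stand-in for real per-event work
        results.append(item * 2)


async def run_pipeline(n: int, capacity: int) -> List[int]:
    queue: "asyncio.Queue[Optional[int]]" = asyncio.Queue(maxsize=capacity)
    results: List[int] = []
    await asyncio.gather(producer(queue, n), consumer(queue, results))
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(n=10, capacity=3)))
```

Distributed systems implement the same principle across machines, but the bounded buffer between a producer and a consumer is the core idea.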

Security is a central concern for live data streaming. Streaming data often includes sensitive information, so robust security controls are needed to protect against unauthorized access and breaches. Organizations should consider encryption in transit and at rest, access control mechanisms, and continuous monitoring of data flows to safeguard the integrity and confidentiality of their streaming data.
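
Encryption in transit (for example TLS) and access control are often configured in the streaming platform itself. As a small illustration of protecting integrity at the message level, the sketch below signs each message with an HMAC so consumers can detect tampering. The envelope format and the key are assumptions for illustration; an HMAC does not provide confidentiality, and a real key would come from a secrets manager rather than source code.

```python
import hashlib
import hmac
import json


def sign(message: dict, key: bytes) -> dict:
    # Canonical JSON (sorted keys, fixed separators) so both sides hash identical bytes.
    body = json.dumps(message, sort_keys=True, separators=(",", ":")).encode()
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode(), "sig": tag}


def verify(envelope: dict, key: bytes) -> bool:
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, envelope["sig"])


if __name__ == "__main__":
    demo_key = b"demo-key-only"  # hypothetical; never hard-code real keys
    envelope = sign({"user": "u1", "amount": 5}, demo_key)
    print(envelope, verify(envelope, demo_key))
```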

Lastly, the need for skilled personnel cannot be overlooked. The complexity of managing live data streaming architectures requires expertise in various domains, including data engineering, software development, and operations. Organizations must invest in training and potentially hiring experienced professionals who can navigate the intricacies of streaming technologies effectively.

Understanding and addressing these challenges is essential for any organization looking to implement live data streaming pipelines successfully. A strategic approach that focuses on data quality, scalability, security, and personnel will lay the foundation for an efficient and effective streaming ecosystem.

Future Trends in Live Data Streaming

The landscape of live data streaming has been evolving rapidly, influenced by several technological trends. One of the most significant is the integration of artificial intelligence (AI) and machine learning (ML) into streaming pipelines. Rather than applying models only to historical batches, organizations increasingly run them directly on incoming events, for example scoring each transaction or sensor reading as it arrives. This allows businesses to derive insights from streaming data as it flows, resulting in faster decision-making and more responsive operations.

Additionally, edge computing is becoming increasingly crucial for live data streaming. This paradigm shift enables data processing closer to the data source, which significantly reduces latency and optimizes bandwidth usage. By deploying data streaming solutions at the edge, organizations can enhance the performance of their applications while ensuring that real-time data access is both reliable and efficient. This trend is particularly relevant for industries that rely heavily on IoT devices and require immediate insights from the data generated at remote locations.
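
To illustrate how processing at the edge saves bandwidth, the sketch below collapses a burst of raw sensor readings into a single summary on the device, so only the summary and an alert flag need to be sent upstream. The `Summary` fields and the alert threshold are assumptions.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Summary:
    count: int
    minimum: float
    maximum: float
    average: float
    alert: bool


def summarize_at_edge(readings: Sequence[float], alert_above: float) -> Summary:
    """Collapse a non-empty burst of raw readings into one compact record.

    Only this summary is transmitted upstream, instead of every reading.
    """
    if not readings:
        raise ValueError("need at least one reading")
    peak = max(readings)
    return Summary(
        count=len(readings),
        minimum=min(readings),
        maximum=peak,
        average=statistics.mean(readings),
        alert=peak > alert_above,  # hypothetical alert rule
    )


if __name__ == "__main__":
    print(summarize_at_edge([20.0, 21.0, 22.0, 85.0], alert_above=60.0))
```

Four readings become one record here; on a sensor sampling many times per second, the same pattern can shrink upstream traffic substantially while still surfacing alerts immediately.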

Furthermore, advancements in cloud services are reshaping the capabilities of live data streaming. Cloud-native architectures allow businesses to scale their streaming solutions rapidly, accommodating growing data volumes and increasingly complex analytics. With rising demand for robust data processing capabilities, cloud platforms continue to enhance their services, giving organizations better tools for managing and analyzing streaming data. These developments are paving the way for innovative applications across sectors such as finance, healthcare, and entertainment, where real-time insights can significantly enhance user experiences and operational efficiency.

As AI, machine learning, edge computing, and cloud services continue to evolve, they will play a pivotal role in transforming live data streaming pipelines. Embracing these trends can empower organizations to maximize the effectiveness of their data infrastructures, unlocking new opportunities and driving growth in the digital age.

Conclusion: The Importance of Embracing Live Data Streaming

As organizations navigate the complexities of an increasingly data-driven landscape, the importance of adopting live data streaming cannot be overstated. Throughout this guide, we have explored the technical foundations, benefits, and application scenarios of live data streaming pipelines. From facilitating real-time decision-making to enhancing operational efficiency, the capabilities of live data streams have proven to be transformative.

By leveraging live streaming technologies, organizations can gain instantaneous insights into their operations, customer behaviors, and market trends. This enables them to respond swiftly to changing conditions, thereby gaining a strategic advantage over competitors. Moreover, the accessibility of real-time data fosters a culture of data-informed decision-making across departments, aligning teams towards common goals.

Organizations that embrace live data streaming can implement proactive measures, rather than simply reactive strategies. For instance, real-time analytics can aid in predictive maintenance, customer support, and supply chain optimization. This shift from historical data analysis to real-time insights empowers businesses to innovate and improve customer experiences, ultimately driving profitability.

To embark on their journey into live data streaming, organizations should start by assessing their existing data infrastructure and identifying specific pain points that real-time data can address. Investing in reliable data streaming solutions and fostering a culture that values real-time analytics will be crucial steps towards integration. Additionally, training employees on these technologies will help them harness the full potential of live data, ensuring a smoother transition into this paradigm.

In conclusion, live data streaming represents a paradigm shift for organizations aiming to thrive in a fast-paced environment. By adopting and leveraging the capabilities of live data pipelines, companies can optimize operations, enhance customer satisfaction, and ultimately achieve their strategic goals more efficiently than ever before.